In this webinar we discussed how to expose data efficiently in terms of performance and governance. We reviewed some interesting patterns and technologies which make it easier for your users to consume the data previously processed in your pipelines.

For any Big Data architecture, the main goal is to make data available to users that would be very hard to use in traditional architectures because of size or latency requirements.

At the end of the session, we held a public live Q&A session open to all.

This session was recorded live as part of our weekly Ask Me Anything sessions, live every Wednesday.

Join us for future Ask Me Anything sessions! More details on our website.

APIs and Architecture Overview

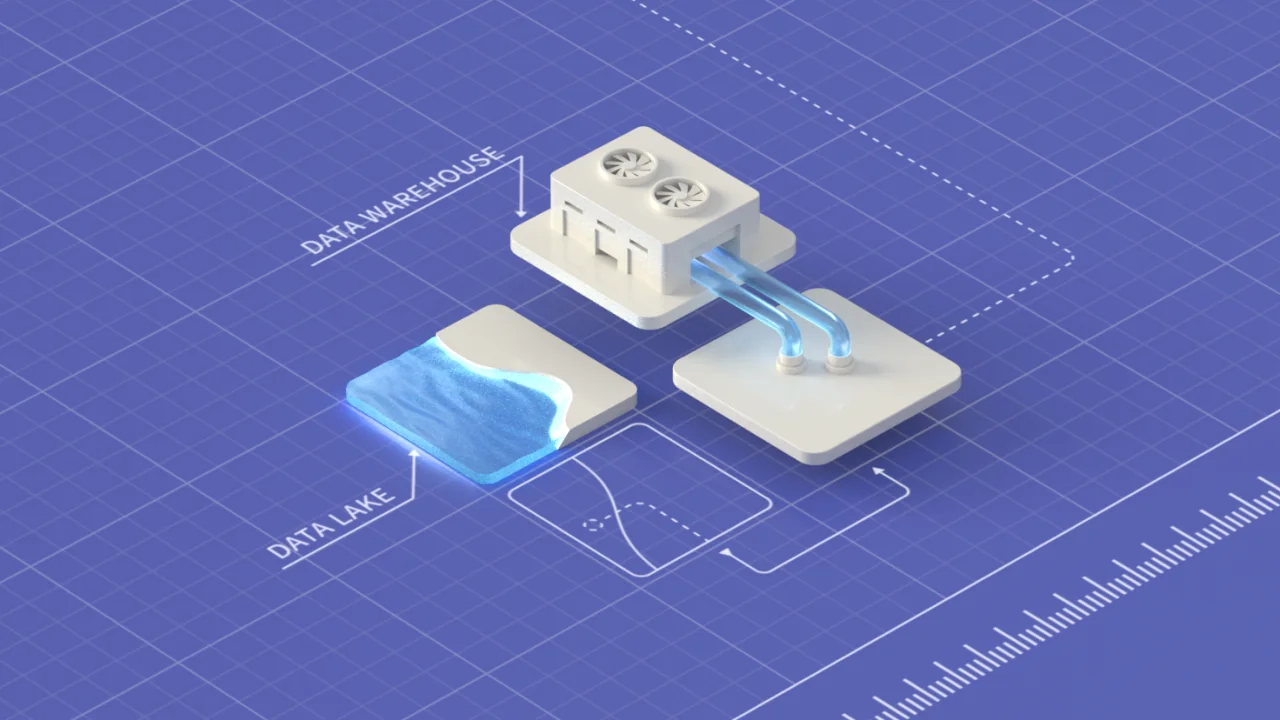

We have sources generating data, such as our client's browser, the devices, the IoT, all the services, databases, and even chains of captured events, including data arriving through MQTT brokers or any other IoT protocols.

In the previous Ask Me Anything sessions we covered the Message Queuing Tier with tools like Kafka, the Analysis Tier with tools like Spark or Kafka Streams, and Data Stores with tools like Elasticsearch.

In this session, we will focus on the Collection Tier and then the Data Access Tier. Both tiers are essential to provide a good service to our customers, because they are what the systems generating information will see and what the users consuming it will interact with. If the central tiers are perfect but you cannot make the information easily available to consumers, it can be highly frustrating.

Collection Tier

Irrespective of your use case or the industries you're working with, one vital thing is flexibility in the collection tier: adapting to different sources of information.

In some cases the sources send data to our systems through APIs, and in other cases we need to retrieve the data from an API they provide. Either way, we need some nodes to retrieve that information and store it safely and efficiently in the message queue tier. In the collection tier, we have many possible patterns: request/response, publish/subscribe, stream, request/acknowledge, and one-way.

Here are some of the patterns we've found most interesting:

Load Balancer

Placing a load balancer in front of this tier is a good way to scale it. It also helps with more than scaling: if for whatever reason we lose some of the nodes processing data, the others can continue working and producing information, because we have more than one, so we do not see any outage in our services. That is very important.

Buffering Layer

The other thing, and this is part of the pattern we see in this example, is to have a buffering layer in this tier. If a system produces information at a very high rate, our nodes may not be able to process it in time to put it in the message queue tier. What we can do is place something in between that just stores the information, giving our nodes time to put it in the message queue tier. That buffering layer may be something like Kafka. In some cases Kafka is also used in front of databases, to store information and to shield the database from the services writing to it. This is very typical in Elasticsearch-based architectures, where the database has a maximum ingestion rate: we put Kafka in front of it, so we make sure we never lose any messages and we don't put a lot of pressure on the database.

We have many possibilities here, so we need flexibility and performance: flexibility to retrieve information from different sources, and performance to make sure we never lose messages and to do that in the cheapest possible way.
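As a rough illustration of the buffering idea, the sketch below puts an in-memory queue between a fast producer and a slower writer. The standard-library `queue.Queue` only stands in for a real buffer such as Kafka, and `slow_store`, `run_buffered`, and the batch size are hypothetical names and values chosen for this example, not something shown in the session.

```python
import queue
import threading
import time


def run_buffered(n_messages, batch_size=10):
    """Push n_messages through a buffer and return what the store received."""
    buffer = queue.Queue()  # stand-in for the buffering layer (e.g. Kafka)
    stored = []             # stand-in for the data store

    def slow_store(batch):
        # Hypothetical database write: slower than the producer.
        time.sleep(0.001)
        stored.extend(batch)

    def consumer():
        batch = []
        while True:
            item = buffer.get()
            if item is None:  # sentinel: the producer is done
                break
            batch.append(item)
            if len(batch) >= batch_size:
                slow_store(batch)  # write in batches to limit store pressure
                batch = []
        if batch:
            slow_store(batch)      # flush whatever is left

    worker = threading.Thread(target=consumer)
    worker.start()
    for i in range(n_messages):
        buffer.put(i)  # the producer never waits for the store
    buffer.put(None)
    worker.join()
    return stored
```

The producer finishes as fast as it can enqueue, while the store receives every message in batches at its own pace. A real deployment would also need durability and back-pressure, which this in-memory sketch does not provide.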

Data Access Tier

This tier enables our consumers to see and retrieve the information we store in our big data systems. It's very important, and it's going to depend on what consumers we have. It may be other devices consuming that information, it may be other services, it may be a dashboard where users connect through a website to see graphics of how the data evolves, and so on. Depending on what type of services are consuming this data, there are different patterns we can use, like the ones we saw in the previous tier.

There are also different protocols and technologies we can use, such as REST API, GraphQL, Webhooks, HTTP Long Polling, Server-sent Events, WebSockets, etc. This list is not exhaustive, but these are what you're going to find in most big data architectures, and there are good reasons for that.

REST API: OpenAPI & API First

Probably the most popular way to build an API is as a REST API. It's a standard approach, typically using JSON, and it's heavily based on the HTTP standard. It's interesting because it's not only used in Big Data services but also in web services in general. Even at the enterprise level, it's heavily used right now. It's also used on mobile and so on.

PROS

What is nice about REST APIs is that they are used everywhere, and the frameworks are really good and so is the support, which makes REST an easy way to develop an API. It works well when customers need to retrieve information in bulk from time to time, maybe to generate graphics, maybe to generate a report, maybe to launch other services, and so on.
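To make the request/response style concrete, here is a minimal sketch of a read-only REST-style endpoint built only on Python's standard library. The `/reports` resource, the `REPORTS` data, and the `route` and `serve` helpers are hypothetical names invented for this example; a production service would more likely use a web framework.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-memory data standing in for the big data store.
REPORTS = {"1": {"id": "1", "name": "daily-traffic", "rows": 1200}}


def route(path):
    """Map a GET path to (status code, JSON-serializable body)."""
    parts = [p for p in path.split("/") if p]
    if parts == ["reports"]:
        return 200, list(REPORTS.values())
    if len(parts) == 2 and parts[0] == "reports" and parts[1] in REPORTS:
        return 200, REPORTS[parts[1]]
    return 404, {"error": "not found"}


class ReportHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        # Resolve the resource, then answer with a JSON document.
        status, body = route(self.path)
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port=8000):
    """Start the server on localhost; blocks until interrupted."""
    HTTPServer(("127.0.0.1", port), ReportHandler).serve_forever()
```

Calling `serve()` and then requesting `/reports/1` returns the single report as JSON. Keeping the routing in a plain function makes the resource mapping easy to test without opening a socket.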

CONS

If you have an infinite flow of information where customers need to receive information all the time, a REST API does not give you a good way to do that. What you have to do is polling: your customers have to invoke your API periodically, and that may not be very efficient, depending on the throughput of the system or the refresh rate the customers or clients need, for instance. For those cases there are other possibilities, other protocols we can use.
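The cost of polling can be seen in a small simulation. In the sketch below, `poll` and `fetch_since` are hypothetical names, and the event source is a plain list rather than a real HTTP call; the point is only to count how many requests come back empty.

```python
import time


def poll(fetch_since, interval_s, max_polls):
    """Call fetch_since(cursor) repeatedly; return (items, empty_polls)."""
    cursor = 0
    items = []
    empty_polls = 0
    for _ in range(max_polls):
        new_items = fetch_since(cursor)
        if new_items:
            items.extend(new_items)
            cursor += len(new_items)  # remember how far we have read
        else:
            empty_polls += 1          # a request that returned nothing
        time.sleep(interval_s)
    return items, empty_polls


# Hypothetical source: three events are available, then nothing new arrives.
EVENTS = ["a", "b", "c"]


def fetch_events_since(cursor):
    return EVENTS[cursor:]
```

With this source, `poll(fetch_events_since, 0, 4)` collects the three events on the first call and then makes three requests that return nothing. A shorter interval lowers latency but increases the number of wasted calls, which is the trade-off described above.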

GraphQL

GraphQL is a query language for APIs and a runtime for fulfilling those queries with your existing data. GraphQL provides a complete and understandable description of the data in your API, gives clients the power to ask for exactly what they need and nothing more, makes it easier to evolve APIs over time, and enables powerful developer tools.

PROS

Basically, GraphQL is a lot more flexible than a REST API, allowing us to run very powerful queries through HTTP APIs. So it's really good when you need to provide an API that is more like an SQL query or something like that, because it is a lot closer to that than a REST API, which is more restricted in that sense.
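The idea of clients asking for exactly the fields they need can be illustrated without any GraphQL library. The sketch below is plain Python, not a GraphQL implementation: `select`, the `device` record, and its field names are all hypothetical and exist only to show the shape of a field selection.

```python
def select(record, selection):
    """Return only the fields named in selection, recursing into nested data.

    selection maps a field name to None (take the value as-is) or to a
    nested selection applied to a dict or to every dict in a list.
    """
    result = {}
    for field, sub in selection.items():
        value = record[field]
        if sub is None:
            result[field] = value
        elif isinstance(value, list):
            result[field] = [select(v, sub) for v in value]
        else:
            result[field] = select(value, sub)
    return result


# Hypothetical stored record with more fields than most clients need.
device = {
    "id": "d1",
    "model": "x100",
    "firmware": "1.2",
    "readings": [
        {"ts": 1, "temp": 20.5, "humidity": 40},
        {"ts": 2, "temp": 21.0, "humidity": 41},
    ],
}

# Roughly what a query such as `{ id readings { temp } }` asks for,
# under an assumed schema.
wanted = {"id": None, "readings": {"temp": None}}
```

Here `select(device, wanted)` returns only the `id` and the `temp` of each reading. A fixed REST resource would typically return the whole record and leave the filtering to the client.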

CONS

GraphQL is a bit more complex to implement in terms of performance and evolution. The point is to be able to run general queries, and to build that there is some coupling between the API and the backend systems. So when you want to evolve or change your data store, the changes you have to make in the GraphQL implementation will be significant, and maybe not as transparent to the customer as they are when using a REST API.

What matters here is the requirements our customers have for consuming that information. And again, we need to make it perform well, because they typically want to access the information as soon as possible. That means we should reduce latency. We also need to make sure it's easy to maintain and easy to evolve and scale.

Conclusion

There are several ways to build an API, each with its pros and cons. If it is not a real-time API, then REST and GraphQL are the most popular. It is important to understand the use case to choose what is better for you, because neither is a silver bullet that should be used for everything.