Optimizing OpenSearch clusters with time-series data means optimizing the shard size and count. When ingesting data using AWS Data Firehose, this can be achieved using Rollover Indices. Read on for a guide on how to set this up.

When deciding how to organize data into indices in OpenSearch, the first consideration is what kind of data would be indexed into them - so, for example, data with different schemas would be indexed into separate indices (or separate index patterns).

The next step is to consider how much data would be indexed into each index. This needs to be optimized at a lower level - the shards, which are data units that the indices are composed of. Creating large shards might make them more difficult to read, and could be the cause of imbalance in the cluster, while having a lot of small shards can increase overhead and reduce performance.

For time-series data, optimizing the shard size and count is usually managed using Data Streams, as explained in this article. However, that is not possible when ingesting documents using AWS Data Firehose. Using Data Streams requires indexing requests to use the value "create" for the parameter "op_type", which basically means providing a guarantee that the data is append-only. As Firehose uses the op_type value of "index", we need some other solution.

Fortunately, there's an alternative method that offers similar benefits without the op_type limitation - rollover indices. This approach uses an alias to manage a sequence of indices: data is written only to the most recent index, while all indices under the alias can be read from. When an index reaches a certain size, it’s rolled over and a new one is created, keeping shard sizes consistent and optimal.

To achieve this, we need to:

- Create a lifecycle policy

- Create the first index and the alias

- Configure Firehose to write to the alias

Examples for each of those steps follow. The following applies to both non-managed and AWS Opensearch. For Elasticsearch the same basic idea applies with a different syntax for the policy.

Creating an ISM policy to manage rollover

In order for this to work, we would first have to create a policy that would allow us to rollover the indices. Here's a simple example:

PUT _plugins/_ism/policies/logs

{

"policy": {

"default_state": "rollover",

"states": [

{

"name": "rollover",

"actions": [

{

"rollover": {

"min_primary_shard_size": "30gb"

}

}

],

"transitions": []

}

],

"ism_template": {

"index_patterns": ["logs-patternname-rollover*"],

"priority": 100

}

}

}

Creating the first index and creating the alias

Unlike Data Streams, the rollover indices method requires us to create the first of the rollover indices (sometimes referred to as "bootstrapping" in this context) and assign it to the alias, like so:

PUT logs-patternname-rollover-000001

{

"aliases": {

"logs-patternname-rollover": {

"is_write_index": true

}

}

}

Configure Firehose to write to the rollover alias

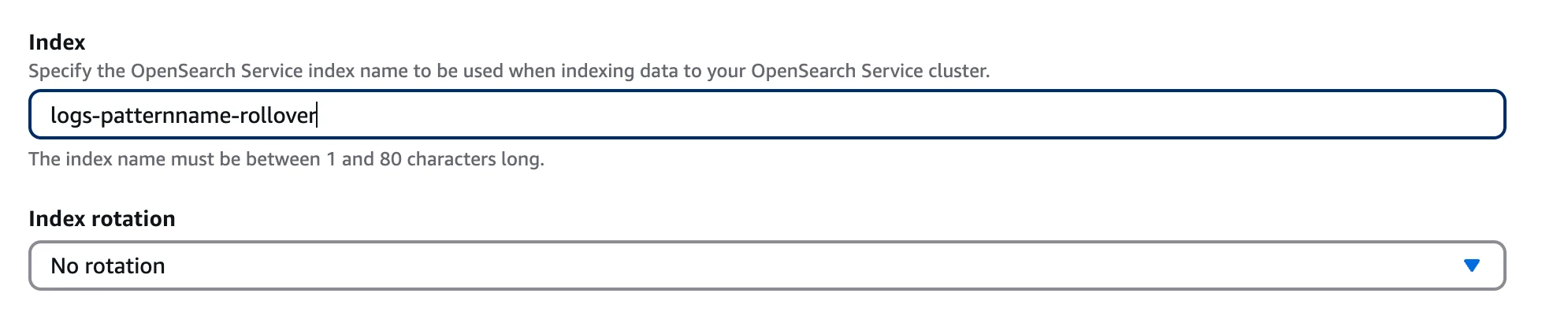

In Firehose, make sure you set the index field to point to the name of the alias, and that no index rotation is selected.

Moving to rollover indices

Assuming you already have an index or a set of indices in the index pattern, switching to rollover indices involves:

- Making sure the new and old indices can both exist under the same pattern you're using to query the data, and that the new indices are a subpattern of that pattern (so, we can use "logs-patternname*" as the search pattern for the indices, and then "logs-patternname-rollover*" for the new ones as explained above).

- Create a policy that would match the new subpattern, with a higher priority than the existing policies for the pattern.

- Configure Firehose to write to the rollover alias.

Other considerations

Some additional considerations need to be taken into account based on your scenario - feel free to contact us if you require assistance with any related question:

- Determining the ideal shard size for your requirement (though 30gb is a good default for many cases)

- Moving the data to another tier following the rollover

- Deciding on an overall sharding strategy - the use of rollover indices is just one component of this

- Evaluating whether Firehose is the correct ingestion tool for your scenario

- Considering whether the rollover strategy is a good fit for your data (e.g. Non timeseries or frequently updated data might not be)