Explore all the best practices for Apache Iceberg table maintenance to keep performance high and costs low.

Do you have an Apache Iceberg table on S3 or other blob storage and want to maintain it with optimal performance and cost in mind? This article is for you.

Let’s start at the beginning.

An Iceberg snapshot contains one or more manifest files. A manifest file, on the other hand, is a subset of a snapshot that contains a list of data or deletes files.

Since Iceberg tracks each data file in a table, larger data files result in less metadata in manifest files and fewer requests to S3.

What about small data files? Having a lot of them impacts both read and maintenance performance. When reading, you might come across issues like poor performance and growing costs. Small data files eventually make performing maintenance harder.

Keeping this in mind while maintaining your Iceberg tables is crucial. Continue reading to explore all the best practices for table maintenance to keep performance high and costs low.

4 best practices for Iceberg table maintenance

1. Run manifest file merges

Iceberg merges manifest files on writes to speed up query planning and eliminate redundant data files.

In some cases, it’s a good idea to manually trigger rewriting manifests to recalculate the metadata and compact it. Otherwise, you don’t need to run merges that often.

There are certain conditions where Iceberg merges manifest files during writes, but it doesn't do it all the time. Let's say we write into "hourly" partitions and we write every minute. In the usual case, we end up with around 60 write operations being done to the table and 60 manifests written. Iceberg wouldn't merge those manifest files.

However, if we wrote once an hour, we would have a single manifest for this partition and wouldn't need to do that.

2. Compact data files

Running compaction tasks can boost the performance of your Iceberg tables. Always balance the optimization level with your requirements. Here are a few best practices to get you on the right track.

Use the right compaction strategy

binPack – binPack is the default rewrite approach that rewrites smaller files to the target size and reconciles any deleted files. It doesn’t carry out any further optimizations, such as sorting. Since this approach is the quickest, it can be your best choice if the time needed to finish the compaction is a factor.

- Pros: Provides the fastest compaction jobs.

- Cons: No data clusters.

Sort – Using the sort technique, you can cluster the data for improved efficiency in addition to optimizing the file size. The advantages of min/max filtering will be even larger (fewer files to scan, the faster) when similar data is clustered together since fewer files may contain relevant data for a query.

- Pros: Read times can be significantly reduced when data is grouped by frequently asked fields.

- Cons: Compared to binPack, this means longer compaction jobs.

zOrder – Z-order clustering is a good pick for multi-dimensional table optimization. Contrary to multi-column sorting, it assigns equal weights to each column being sorted. It doesn't prioritize one sort over another.

- Pros: This can even speed up read times if queries frequently use filters across several fields.

- Cons: Compared to binPack, compaction jobs take longer to complete.

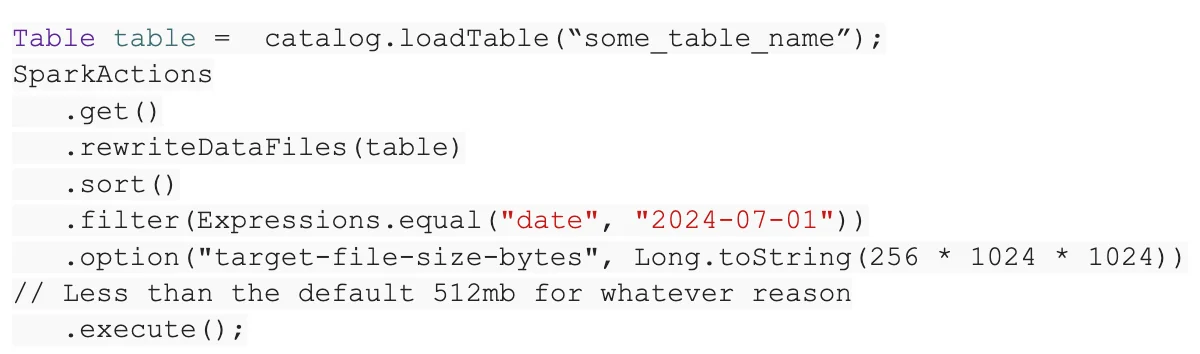

Run compaction on parts of the table

You might not want to conduct compaction on the whole table, especially if you pick the binPack method. To prevent tiny files, consider compacting only the data produced most recently. You can do that by filtering your table by its partitions.

Work on multiple file groups at once

You can parallelize the compaction process by running it on several file groups at the same time. This makes the process much faster.

Companies running on the cloud usually spin up dedicated compute to perform these types of maintenance. They can "squeeze out" extra resources from their compute instances if they increase the number of file groups.

This is only relevant if we don't compact frequently enough and have a large backlog to deal with.

Configuration options for compaction jobs

Here are three examples of configurations you can use:

target-file-size-bytes This will determine the output files' desired size. This will use the write.target.file-size-bytes attribute of the table by default, which has a 512 MB default value.

max-concurrent-file-group-rewrites Sets the maximum number of file groups that can be written at once.

max-file-group-size-bytes A file group's maximum size is not one single file. When a worker is writing a certain file group and the partition is greater than the RAM available to it, this setting should allow the worker to split the partition into many file groups that can be written concurrently.

3. Deal with expiring old snapshots

Consider the storage implications of retaining data that might not be part of the table's present state if your system has the notion of table snapshots. Since Iceberg snapshots reuse original, unaltered data files, this issue is already partially remediated.

Still, data files must frequently be overwritten or removed to create fresh snapshots. These files are only useful for older snapshots. They mount up over time and increase the overhead associated with preserving older snapshots permanently.

Here are a few steps you can take to handle expiring old snapshots:

- Remove all snapshots that are older than your time travel window

- Avoid deleting live data

- Run it frequently to clear up old snapshots and all files that are no longer referenced once those snapshots are removed

This approach is relatively cost-efficient since it just uses metadata to find files to clear.

4. Remove orphan files

But what happens if there are data files in the table directory without Iceberg or any snapshot references? These are the so-called orphan files.

Orphan files might appear in a table's directory for a variety of reasons. For example, it can happen when writes fail or when files fail to delete during maintenance jobs.

It's not easy to identify which files are orphans; you must take into account every table snapshot.

By using the remove_orphan_files procedure, Iceberg allows the removal of orphan files. The process yields a table with a single column called orphan_file_location that contains the complete file path of each orphan file removed. By default, the operation only removes orphan files older than three days as a safety measure to avoid accidentally deleting files that are currently being written.

You don’t need to run this frequently; use this approach when things go wrong with your table. This approach doesn't improve performance but successfully prevents you from getting billed for unused storage.

Summary

When dealing with Iceberg table maintenance, you have optimization levers available, from compacting files to removing old snapshots. The choice of your maintenance approach depends on various factors, including query patterns, table size, performance needs, storage type, regulatory requirements, security rules, and more.

Ultimately, Iceberg's table maintenance processes reflect the community's declarative data engineering approach and the idea that data professionals should be empowered to focus more on the actual data and less on its technical upkeep.

To learn more about Iceberg best practices, check out this webinar: Flink and Iceberg: A Powerful Duo for Modern Data Lakes

Contact us to gain world-class support for all your Iceberg needs.