Go beyond theory with our weekly AI digest. Learn how HNSW vector search works, master prompt engineering, and get production tips for LLM evaluati

Have you ever wondered what separates a stunning AI demo from a system that truly works at scale? The gap between the two is filled with brilliant algorithms and hard-won engineering wisdom. This week, we're diving deep into both sides of that equation. We'll explore the intricate mechanics that power today's most advanced AI-from the lightning-fast vector searches that feel like magic to the complex diffusion processes that turn random noise into breathtaking images. But understanding the theory is only half the battle; putting it into practice is where real value is created.

This digest bridges that gap, moving from the engine room to the control panel. We'll demystify the HNSW algorithm with an unexpected guide-Finding Nemo-and then shift to the front lines of AI implementation. Discover how a single prompt modification can swing model accuracy by nearly 9%, see the surprisingly simple architecture that enables scalable AI agents, and learn how industry leaders like Booking.com are using LLMs to evaluate other LLMs in production. This isn't just a summary of what's new; it's a playbook of actionable insights, designed to help you build smarter, more reliable, and more efficient AI systems.

The video explains the HNSW algorithm by comparing it to Marlin's search for Nemo. It addresses the core problem of transforming a slow, O(n) linear search into a highly efficient O(log n) logarithmic search, which is essential for modern vector databases.

The analogy illustrates key concepts: the ocean is the multi-dimensional vector space, and fish are data points clustered by similarity. "Small World Networks" are shown through characters like Dory and Crush, who act as long-range connections. HNSW formalizes this with a hierarchical graph structure. Higher, sparse layers provide coarse, long-distance navigation (highways), while lower, dense layers allow for precise, local searching (local roads).

The search process is a greedy algorithm that starts at the highest layer, moves closer to the target, and then drops to lower layers for refinement. A practical demo using Redis Vector Sets shows how a similarity search can correctly reconstruct the path of characters Marlin meets on his journey to find Nemo, validating the algorithm's effectiveness.

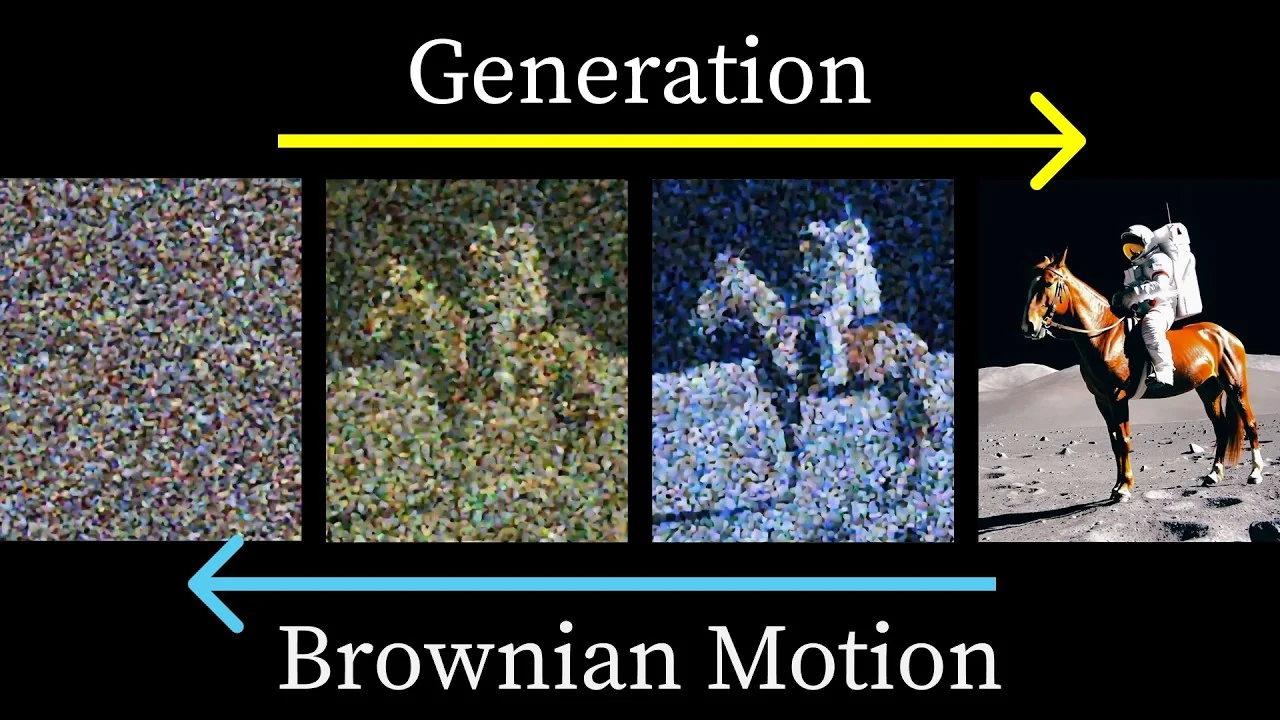

AI image/video models use a process called diffusion, which is analogous to reversing Brownian motion in a high-dimensional space. The process begins with pure random noise, which is iteratively refined by a transformer model into a coherent image or video by following a learned, time-varying vector field.

The connection between text prompts and visual content is enabled by models like CLIP (Contrastive Language–Image Pre-training). CLIP learns a shared "embedding space" where text and images are represented as vectors. This space allows for conceptual arithmetic (e.g., 'man with hat' - 'man' ≈ 'hat'), forming the basis for text-guided generation.

Diffusion models like DDPM are trained to predict the total noise added to an image, a more stable objective than single-step denoising. The generation is steered using "conditioning" (providing the text vector) and significantly enhanced with "classifier-free guidance," which amplifies the difference between a prompted and unprompted output to improve prompt adherence. Faster, deterministic generation is possible with methods like DDIM, which use an ordinary differential equation to achieve the same result in fewer steps.

Evaluating generative AI is complex due to the lack of a single "ground truth." Booking.com tackles this with an LLM-as-a-judge framework for scalable, automated assessment, using a powerful LLM to score application outputs. This allows for "continuous monitoring of the performance of a GenAI application in production... with minimal human involvement."

Success hinges on a high-quality "golden dataset" (ideally 500-1000 examples) that mirrors production data, created using a rigorous annotation protocol. The judge-LLM is then developed through iterative prompt engineering to replicate human judgment with high accuracy.

Actionable Takeaway: Use a strong model (e.g., GPT-4) as the judge during development for a quality baseline, then deploy a more cost-effective version for large-scale production monitoring to balance quality and operational cost.

Prompt engineering is a critical factor in LLM performance, directly influencing accuracy, speed, and cost. An experiment analyzing product review sentiment with gpt-4.1-mini demonstrated that modifying only the prompt can alter accuracy by as much as 8.8% (from 84% to 92.8%). This highlights that even with advanced models, the prompt is a key differentiator between mediocre and outstanding results.

The experiment found clear trade-offs. The fastest prompt (~0.54s/sample) was simple and direct, while the most accurate (~0.77s/sample) asked the model for a confidence level, which improved results even without using the score. Interestingly, complex techniques like "Zero Shot Chain of Thought" were slow (3.35s) and inaccurate, whereas a simpler "self-talk" prompt ranked second in accuracy.

The core insight is that "the prompt is a dial, not a switch." The optimal prompt depends on your primary goal. Key takeaways include defining your optimization target (e.g., quality vs. latency), experimenting with multiple prompts against an evaluation set, and iterating to find the best balance for your specific task.

Effective AI agent systems rely on a simple two-tier architecture: a primary agent for context and orchestration, and stateless subagents for specific tasks. This model proves complexity is the enemy. The most critical rule is that subagents must be stateless, like pure functions. This design enables massive parallel execution-turning 5-minute jobs into 30-second tasks-and simplifies caching, saving up to 40% on API calls.

For orchestration, Sequential Pipeline and MapReduce patterns handle 95% of use cases. Communication must be structured with clear objectives. Key principles include failing fast, ensuring observable execution, and matching the AI model's complexity to the task. Avoid common pitfalls like deep hierarchies, state creep, and overly "smart" agents.

The most actionable advice is to start simple, test subagents in isolation, and remember that "agents are tools, not magic." They excel at specific tasks but rely on you to define that work explicitly.