This week's digest covers fixing RAG's blind spots, using SLMs as the future of Agentic AI, fine-tuning LLMs with RL, and building agents with the human-like ReAct framework.

What separates a clever AI demo from a truly intelligent system that learns, reasons, and adapts? As the initial hype around large language models matures, the focus is shifting from raw capability to refined application. The new frontier isn't just about making models bigger; it's about making them smarter, more reliable, and deeply integrated into the workflows where they can create the most value.

This week's digest dives into the practical engineering of intelligence, exploring how to bridge the gap between AI potential and real-world performance. Inside, we unpack the critical methods for building more sophisticated AI. We'll challenge conventional wisdom by showing why user engagement data, not static evaluations, is the key to fixing RAG's biggest blindspot. We then dive deep into advanced reinforcement learning techniques that align models with human values with surgical precision.

You'll also discover the ReAct framework, a powerful method that teaches AI to "think" before it "acts," and learn how one team is using AI to transform scattered code review feedback into a living set of team standards. Each piece provides a clear, actionable blueprint for creating the next generation of intelligent systems.

RAG's big blindspot

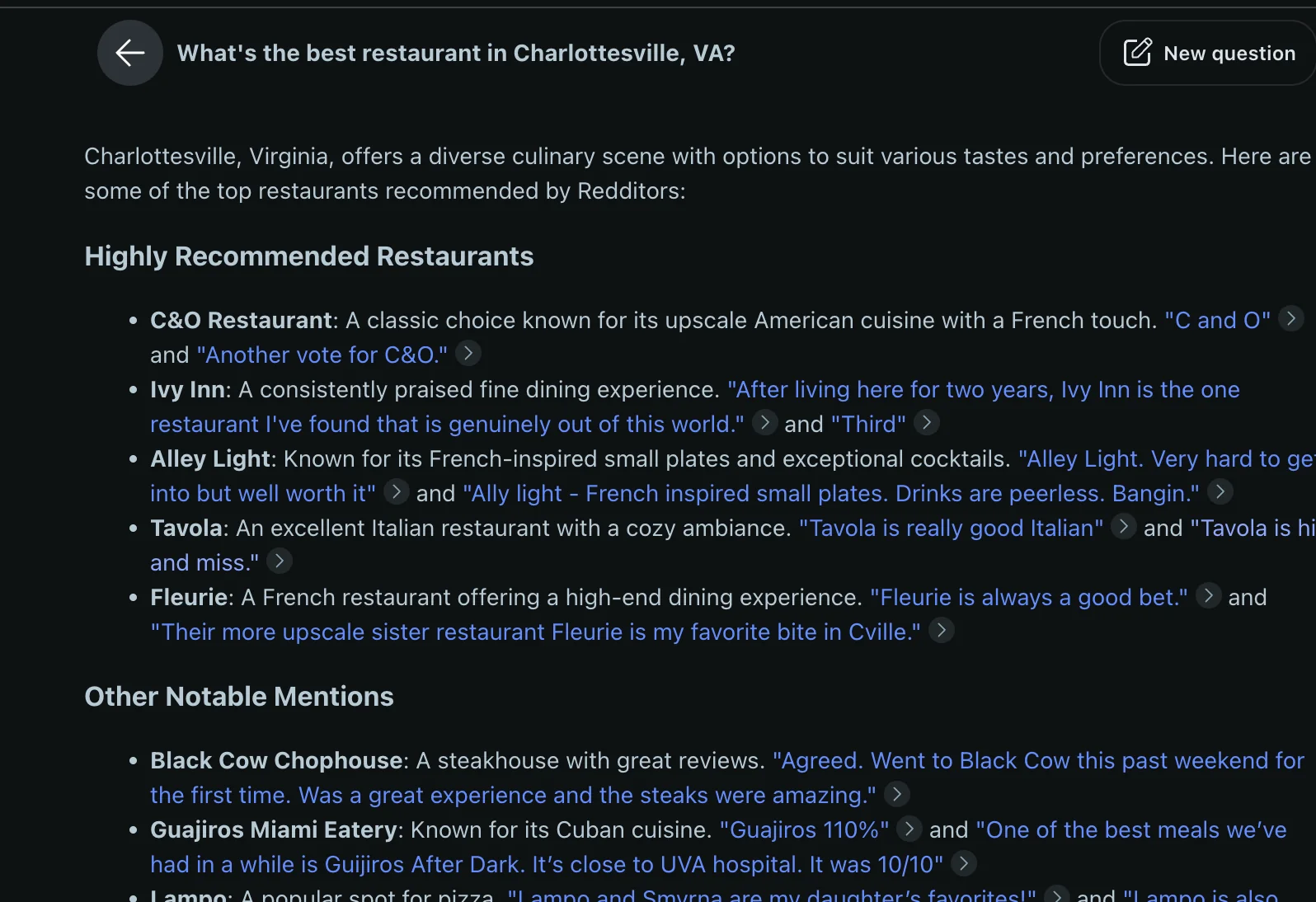

BlogThe RAG community overemphasizes human/LLM evaluations while neglecting crucial user engagement data like clicks and conversions. This gap arises because RAG's unique, conversational queries are difficult to aggregate, unlike traditional search queries. The author argues that "user's subjective, revealed preferences often matter more than some objective, 'wikipedia level' truth," as real-world interactions uncover intent that static evaluations miss.

To capture these insights, Doug Turnbull proposes three actionable takeaways. First, build "useful actions" (e.g., bookmark, share) into the UI to gather implicit feedback. Second, aggregate semantically similar but non-identical queries probabilistically using vector similarity. Finally, use statistical models like the beta distribution to model the uncertainty in this sparse engagement data, allowing teams to continuously learn user preferences and improve RAG relevance.

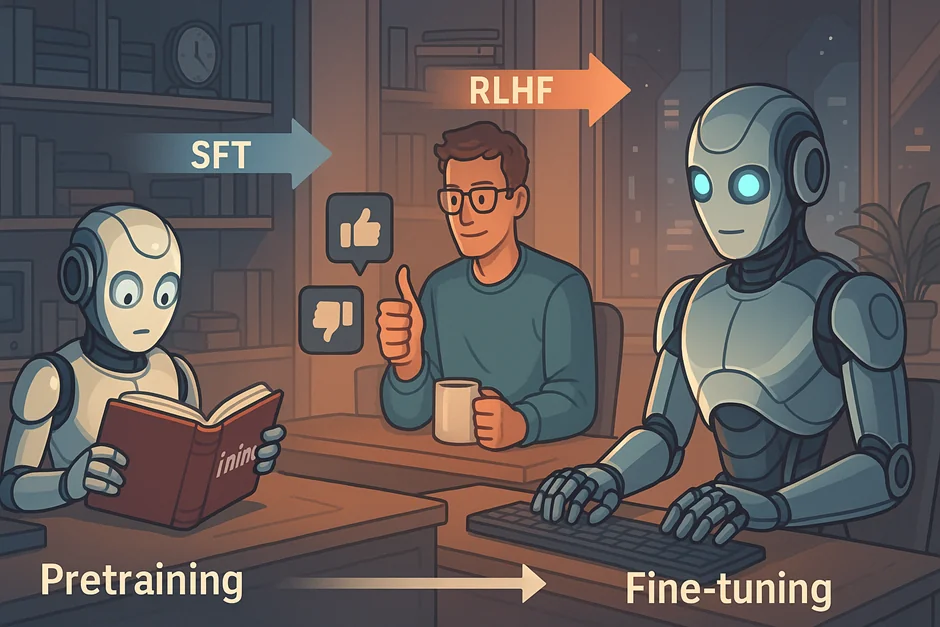

Fine-tuning LLMs with Reinforcement Learning (RL) enhances their alignment with human goals, especially after initial supervised training. The two-stage process begins with Supervised Fine-Tuning (SFT), where models learn from labeled instruction-response pairs, setting a strong behavioral baseline. RL then refines model outputs using reward signals—either from human feedback, heuristics, or metrics—by training a reward model to guide learning.

Policy gradient methods adjust the model’s output probabilities to favor higher-reward actions. However, they can suffer from instability, which is mitigated by techniques like advantage functions and KL-divergence constraints. Proximal Policy Optimization (PPO), a popular method used in systems like ChatGPT, limits policy updates to maintain training stability and performance. It uses a reward model to guide updates while avoiding drastic shifts, ensuring the model continues improving without regressing.

Key insight: “Increase probability of better-than-average responses, decrease others.” Techniques like PPO, DPO, OPRO, and GRPO are critical tools for scalable and reliable LLM fine-tuning via RL.

The ReAct (Reasoning and Acting) framework enhances AI decision-making by separating thought from action. Unlike methods like Chain-of-Thought, ReAct creates a dynamic loop: the model thinks, performs an action (e.g., a web search), observes the result, and refines its next thought. This iterative process mimics human reasoning, leading to more informed and precise outputs.

This approach, called "AI response chaining," allows an LLM to build upon sequential steps to solve complex queries. A provided Langchain example demonstrates a zero-shot agent using this Thought -> Action -> Observation cycle to research a topic, effectively reducing hallucinations and simulating critical thinking.

Key Takeaway: Developers can use ReAct to build more intelligent and reliable AI agents. While alternatives like Self-Consistency and Debate exist, ReAct's structured method of synergizing reasoning and acting is a powerful way to create more sophisticated AI tools.

AwesomeReviewers addresses a key challenge: valuable insights from code reviews are often lost once pull requests are merged. The project's core idea is to "treat code reviews as a data source for continual learning," systematically capturing a team's collective wisdom to create living standards.

The system uses an automated AI pipeline that scans a repository's PR history. It leverages Large Language Models (LLMs) to filter discussions for generalizable feedback, categorize them by topic (e.g., Security, Performance), and then synthesizes multiple related comments into a single, actionable guideline.

This process creates a dynamic library of coding standards, distilled from real-world discussions. The key takeaway is that teams can automate knowledge sharing, reinforcing best practices and creating a "living" set of guidelines that evolves with the codebase, ultimately closing the loop between review feedback and team-wide standards.

Small Language Models (SLMs) are positioned as the future of agentic AI, especially in systems where tasks are repetitive, specialized, and require minimal variation. While Large Language Models (LLMs) excel in general-purpose dialogue, the authors argue that SLMs offer a more efficient and economical alternative for agent-based architectures.

The paper highlights that many agentic systems don’t benefit from the full breadth of LLM capabilities, making smaller, targeted models more suitable. In scenarios requiring broader conversational range, heterogeneous systems using multiple models are proposed as optimal. The authors propose an LLM-to-SLM conversion algorithm to facilitate this shift.

“Even a partial shift from LLMs to SLMs is to have [a significant] operational and economic impact on the AI agent industry.” They call on the community to engage in further discourse, aiming to reduce the cost and complexity of deploying AI at scale.