Struggling with AI agent reliability? This week's digest delivers expert engineering patterns for scaling, mastering context engineering, and building robust LLM apps.

Have you ever built a promising AI agent, only to see it stumble on the last mile to production-grade reliability? You're not alone. As the initial novelty of generative AI wears off, a more sober reality is setting in across the industry. The conversation is shifting from the magic of prompting to the hard-nosed discipline of engineering. We're learning a crucial lesson: the most capable GenAI agents aren't born from a single perfect prompt but are forged through robust systems, meticulous control, and a deep understanding of context. In fact, as one of this week's featured pieces argues, most agent failures aren't model failures; they are context failures.

This week's digest dives deep into this engineering challenge, moving past the hype to deliver actionable strategies for building AI that works. We explore the "12-Factor Agent," a new methodology for creating dependable LLM applications by focusing on deterministic control flow. We then look at how agents can operate a computer through its GUI with mouse and keyboard, and unpack why "Context Engineering" is replacing prompt engineering as the critical skill for developers. To see these principles in action, we look at how Bloomberg's Head of AI Engineering scales "semi-agentic" systems with non-negotiable guardrails for the high-stakes world of finance, and how a new multi-model approach is improving insight extraction from scientific papers.

Let's get building those modern-age agents.

Dex Horthy addresses the common challenge of pushing LLM agents beyond 70-80% reliability, noting that many developers eventually abandon frameworks to gain more control. He proposes the '12-Factor Agent' methodology, a set of engineering patterns for building robust LLM applications.

Horthy argues that most successful 'agents' are primarily deterministic software with LLMs strategically used for tasks like converting natural language into structured JSON. The key is for developers to own their prompts, context window construction, and control flow. This allows for more flexibility, better error handling, and the ability to integrate human-in-the-loop workflows seamlessly.
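To make "deterministic software with LLMs strategically used" concrete, here is a minimal sketch, assuming a model that returns the next step as JSON. Everything in it is hypothetical and for illustration only: `fake_llm` stands in for a real model client, and the refund intent and the `REFUND_APPROVAL_THRESHOLD` policy are invented. It is not code from the 12-Factor Agent project. The model only translates language into a typed `Step`; plain Python owns the prompt, the error handling, the branching, and the human-approval gate.

```python
import json
from dataclasses import dataclass
from typing import Callable

@dataclass
class Step:
    intent: str
    args: dict

def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a model call that returns the next step as JSON."""
    if "refund" in prompt:
        return json.dumps({"intent": "issue_refund", "order_id": "A123", "amount": 40.0})
    return json.dumps({"intent": "done"})

def parse_step(raw: str) -> Step:
    """Turn the model's JSON into a typed Step; raises ValueError on malformed output."""
    data = json.loads(raw)
    if not isinstance(data, dict) or "intent" not in data:
        raise ValueError("missing intent")
    intent = data.pop("intent")
    return Step(intent=intent, args=data)

REFUND_APPROVAL_THRESHOLD = 25.0  # hypothetical policy: larger refunds need a human

def run(request: str, llm: Callable[[str], str], approve: Callable[[Step], bool]) -> str:
    # We own the prompt: it is a plain string we can version, test, and tune.
    prompt = f"Convert this request into one JSON step with an 'intent' field: {request}"
    try:
        step = parse_step(llm(prompt))
    except ValueError:
        # json.JSONDecodeError subclasses ValueError, so bad JSON also lands here.
        return "could not parse model output"

    # Deterministic control flow: ordinary code, not the model, decides what runs.
    if step.intent == "issue_refund":
        amount = float(step.args.get("amount", 0))
        if amount > REFUND_APPROVAL_THRESHOLD and not approve(step):
            return "refund rejected by human reviewer"
        return f"refunded {amount} on {step.args.get('order_id')}"
    if step.intent == "done":
        return "nothing to do"
    return f"unknown intent: {step.intent}"
```

Because the branching is ordinary code, a malformed model response becomes one more handled case instead of a derailed agent loop, and the `approve` callback is where a human-in-the-loop step (a Slack message, a ticket, a UI prompt) would plug in.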

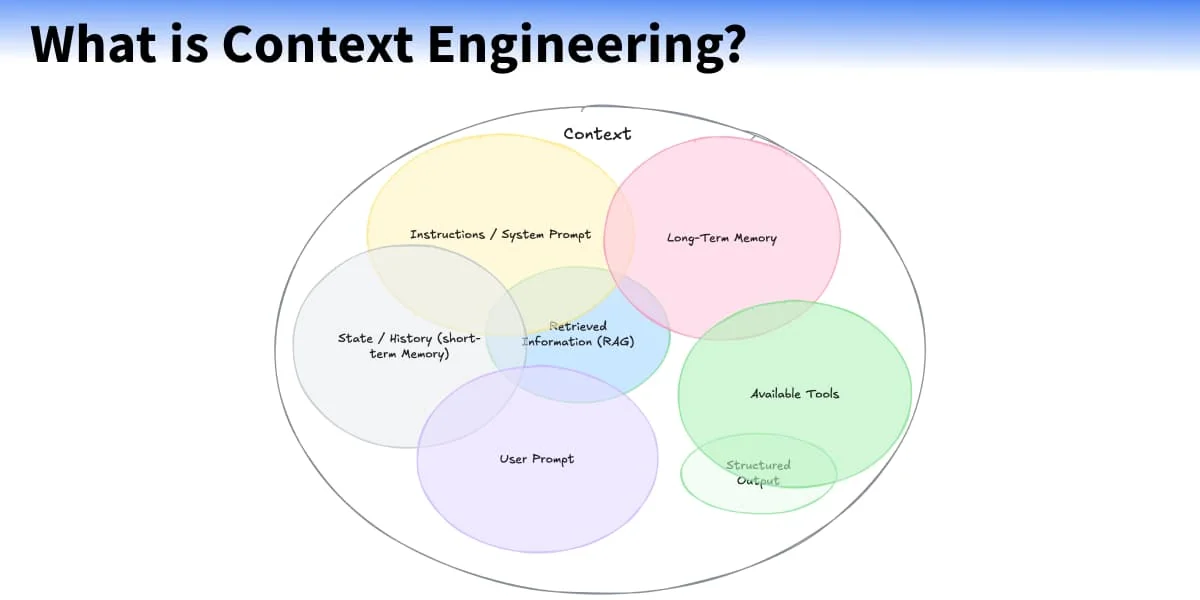

The crucial skill in AI development is rapidly evolving from simple prompt engineering to the more sophisticated discipline of Context Engineering. This approach argues that the success or failure of an AI agent depends less on the model's intelligence and more on the quality of the information it receives. As the article states, "most agent failures are not model failures... they are context failures." Context extends far beyond the user's immediate prompt to include system instructions, conversation history, long-term memory, retrieved data (RAG), and available tools. Providing this rich, dynamic context is what elevates an AI from a "cheap demo" to a "magical" and genuinely helpful assistant that can perform complex, personalized tasks.

The actionable takeaway is that building powerful AI agents requires a systemic approach. Context Engineering is defined as "the discipline of designing and building dynamic systems that provides the right information and tools, in the right format, at the right time." Instead of polishing a perfect static prompt, developers must engineer systems that gather, format, and deliver all the necessary information, such as calendar data, user history, or API access, just before the LLM is called. Ultimately, the future of reliable and effective AI lies in this engineering discipline, making it the central challenge for creating truly capable agents.
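As a rough illustration of "gather, format, and deliver just before the LLM is called," the sketch below assembles system instructions, long-term memory, calendar data, retrieved documents, available tools, and recent history into one chat-style message list. The `ContextSources` container, its provider callables, and the role/content message format are assumptions made for this example, not an API from the featured article; in a real system each provider would query a calendar service, a vector store, and so on.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class ContextSources:
    """Hypothetical providers for each kind of context the model might need."""
    system_instructions: str
    history: list[tuple[str, str]]              # (role, text) turns so far
    fetch_memory: Callable[[str], list[str]]    # long-term facts about the user
    retrieve_docs: Callable[[str], list[str]]   # RAG results for the query
    fetch_calendar: Callable[[], list[str]]     # e.g. today's events
    tools: list[str] = field(default_factory=list)

def build_context(query: str, src: ContextSources, max_history_turns: int = 4) -> list[dict]:
    """Assemble the model input at call time, in a fixed, predictable layout."""
    sections = []
    memory = src.fetch_memory(query)
    if memory:
        sections.append("Known about the user:\n- " + "\n- ".join(memory))
    calendar = src.fetch_calendar()
    if calendar:
        sections.append("Calendar:\n- " + "\n- ".join(calendar))
    docs = src.retrieve_docs(query)
    if docs:
        sections.append("Relevant documents:\n- " + "\n- ".join(docs))
    if src.tools:
        sections.append("Available tools: " + ", ".join(src.tools))

    # Empty sources are skipped entirely rather than rendered as blank headings.
    system = src.system_instructions
    if sections:
        system += "\n\n" + "\n\n".join(sections)
    messages = [{"role": "system", "content": system}]
    # Keep only the most recent turns so old chatter does not crowd out fresh data.
    for role, text in src.history[-max_history_turns:]:
        messages.append({"role": role, "content": text})
    messages.append({"role": "user", "content": query})
    return messages
```

The design choice worth noting is that the prompt is an output of code, not a hand-edited string: each section can be tested, budgeted, or swapped independently.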

Agent S is an open agentic framework designed to let AI agents use computers the way humans do: through the mouse, keyboard, and direct GUI interaction. It aims to automate complex, multi-step desktop tasks such as data entry, scheduling, and document creation, while improving accessibility for users with disabilities. Built on the latest advances in Multimodal Large Language Models (MLLMs) like GPT-4o and Claude, Agent S tackles three major challenges: acquiring domain-specific knowledge, planning over long task horizons, and navigating dynamic, non-uniform interfaces.

To address these, Agent S introduces Experience-Augmented Hierarchical Planning, combining external web search and internal memory (narrative and episodic) to break down tasks into subtasks and adapt to changing software. A novel Agent-Computer Interface (ACI) further boosts reasoning and control by grounding visual input and using language-based action primitives like `click(element_id)` to improve precision and feedback handling during task execution.
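The ACI idea can be sketched as a small, bounded action space: the agent sees numbered elements and issues text primitives such as `click(2)`, and the interface returns explicit feedback instead of silently failing. The `ToyACI` class, the `Element` fields, and the `type(...)` primitive below are hypothetical illustrations of that idea, not Agent S's actual interface, and the sketch covers only the ACI half (no planning or memory).

```python
import ast
from dataclasses import dataclass

@dataclass
class Element:
    element_id: int
    role: str          # e.g. "button", "textbox"
    label: str
    text: str = ""

class ToyACI:
    """Toy Agent-Computer Interface: numbered elements plus language-based primitives."""

    def __init__(self, elements: list[Element]):
        self.elements = {e.element_id: e for e in elements}
        self.clicked: list[int] = []

    def observe(self) -> str:
        # Grounded, text-only view of the screen that the model can reference by id.
        return "\n".join(f"[{e.element_id}] {e.role} '{e.label}'" for e in self.elements.values())

    def execute(self, action: str) -> str:
        """Parse e.g. "click(2)" or "type(1, 'hi')" and return feedback for the agent."""
        try:
            call = ast.parse(action.strip(), mode="eval").body
        except SyntaxError:
            return f"error: could not parse {action!r}"
        if not isinstance(call, ast.Call) or not isinstance(call.func, ast.Name):
            return f"error: {action!r} is not a primitive call"
        try:
            # Only literal arguments are allowed; nothing from the model is executed.
            args = [ast.literal_eval(a) for a in call.args]
        except ValueError:
            return "error: arguments must be literals"
        name = call.func.id
        if name == "click" and len(args) == 1:
            return self._click(args[0])
        if name == "type" and len(args) == 2:
            return self._type(args[0], args[1])
        return f"error: unknown primitive {name} with {len(args)} args"

    def _click(self, element_id) -> str:
        if not isinstance(element_id, int) or element_id not in self.elements:
            return f"error: no element {element_id}"
        self.clicked.append(element_id)
        return f"ok: clicked {self.elements[element_id].label}"

    def _type(self, element_id, text) -> str:
        el = self.elements.get(element_id) if isinstance(element_id, int) else None
        if el is None or el.role != "textbox":
            return f"error: element {element_id} is not a textbox"
        el.text = str(text)
        return f"ok: typed into {el.label}"
```

For example, after `aci = ToyACI([Element(1, "textbox", "Search"), Element(2, "button", "Go")])`, calling `aci.execute("click(9)")` yields an error string the agent can read and recover from on its next step.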

Agent S achieves state-of-the-art performance on the OSWorld benchmark, an 83.6% relative improvement in success rate over the baseline, and generalizes well to other operating systems, as shown on the WindowsAgentArena benchmark. Its modular design, memory-driven learning, and focus on real-world interfaces mark a major step toward human-level autonomy in computer interaction.

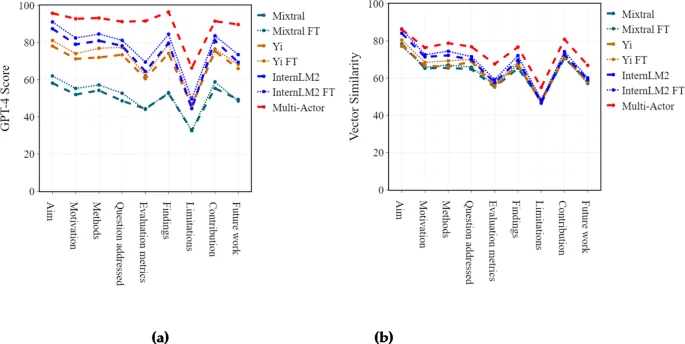

Extracting key insights from scientific papers is a challenge for anyone building RAG or LLM-powered research tools. This study introduces ArticleLLM, a system that uses fine-tuned open-source language models to efficiently pull out the most important findings from dense academic articles. The authors' results show that LLMs can boost both the quality and breadth of extracted insights, pointing the way to better AI-driven knowledge discovery in real-world RAG applications.
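One simple way to combine several extraction models is to keep only the insights that multiple models agree on. The sketch below does that with normalized string matching and a vote threshold. It is an illustrative aggregation strategy under our own assumptions, not ArticleLLM's published method; each `Extractor` is a hypothetical stand-in for a call to a fine-tuned model, and exact matching after normalization is far cruder than the semantic matching a real system would need.

```python
import re
from collections import Counter
from typing import Callable

Extractor = Callable[[str], list[str]]  # hypothetical: article text -> list of insights

def normalize(insight: str) -> str:
    """Crude normalization so trivially different phrasings count as the same insight."""
    text = re.sub(r"[^a-z0-9\s]", "", insight.lower())
    return " ".join(text.split())

def merge_insights(article: str, extractors: list[Extractor], min_votes: int = 2) -> list[str]:
    """Keep insights proposed by at least `min_votes` extractors, in first-seen order."""
    votes: Counter = Counter()
    first_seen: dict[str, str] = {}
    for extract in extractors:
        seen_here = set()  # each model gets at most one vote per insight
        for insight in extract(article):
            key = normalize(insight)
            if key and key not in seen_here:
                seen_here.add(key)
                votes[key] += 1
                first_seen.setdefault(key, insight.strip())
    return [first_seen[k] for k in first_seen if votes[k] >= min_votes]
```

Raising `min_votes` trades breadth for precision; setting it to 1 returns the union of everything any model proposed.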

Anju Kambadur outlines Bloomberg's approach to building and scaling generative AI agents. He details their current 'semi-agentic' architecture, which combines autonomous components with mandatory, non-negotiable guardrails to ensure precision, factuality, and compliance (e.g., preventing financial advice). This is crucial in the high-stakes finance domain where errors have an outsized impact.
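A "semi-agentic" design can be pictured as an autonomous drafting step wrapped in checks that always run and that the agent cannot skip. The sketch below is hypothetical: the talk summary does not describe Bloomberg's actual guardrails, so the phrase patterns for investment advice and the "every number must appear in a source" factuality rule are stand-ins chosen for illustration. A production system would use trained classifiers rather than regular expressions.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class GuardrailResult:
    passed: bool
    reason: str = ""

# Hypothetical phrase list standing in for a real compliance classifier.
ADVICE_PATTERNS = [r"\byou should (buy|sell)\b", r"\bwe recommend (buying|selling)\b", r"\bstrong buy\b"]
NUMBER = r"\d+(?:\.\d+)?"

def no_financial_advice(answer: str, sources: list[str]) -> GuardrailResult:
    for pat in ADVICE_PATTERNS:
        if re.search(pat, answer, flags=re.IGNORECASE):
            return GuardrailResult(False, f"possible investment advice: /{pat}/")
    return GuardrailResult(True)

def numbers_are_grounded(answer: str, sources: list[str]) -> GuardrailResult:
    """Crude factuality check: every number in the answer must appear in some source."""
    source_numbers = set(re.findall(NUMBER, " ".join(sources)))
    for num in re.findall(NUMBER, answer):
        if num not in source_numbers:
            return GuardrailResult(False, f"ungrounded figure: {num}")
    return GuardrailResult(True)

MANDATORY_CHECKS = [no_financial_advice, numbers_are_grounded]

def answer_with_guardrails(
    question: str,
    sources: list[str],
    agent: Callable[[str, list[str]], str],
    fallback: str = "I can't answer that reliably.",
) -> tuple[str, Optional[str]]:
    draft = agent(question, sources)   # autonomous part: free to plan, call tools, etc.
    for check in MANDATORY_CHECKS:     # non-negotiable part: runs on every answer
        result = check(draft, sources)
        if not result.passed:
            return fallback, result.reason
    return draft, None
```

Returning the failure reason alongside the fallback keeps blocked answers auditable, which matters in a compliance-heavy domain.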

The talk focuses on two key aspects of scaling. The first is managing system fragility: because LLM-based agents can compound errors, each system must be built on the assumption that upstream components are imperfect. Downstream systems therefore need their own safety checks, which also lets each agent evolve faster and independently. The second is evolving the organizational structure: early-stage agent development benefits from small, vertically integrated teams that can iterate quickly, while mature systems call for horizontal teams that own common functions such as guardrails and optimize and standardize them across products.
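"Assume upstream is imperfect" translates naturally into a validation gate at the boundary between agents: the downstream component checks every record it receives, accepts what passes, and quarantines the rest with a reason instead of propagating the error. The `Earnings` record, the ticker rule, and the EPS sanity bound below are hypothetical, chosen only to show the pattern.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class Earnings:
    ticker: str
    period_end: date
    eps: float

def validate_upstream(records: list[dict]) -> tuple[list[Earnings], list[str]]:
    """Downstream gate: never trust upstream output; accept what checks out, quarantine the rest."""
    accepted, rejected = [], []
    for i, rec in enumerate(records):
        try:
            ticker = str(rec["ticker"]).upper()
            # Hypothetical rule: 1-5 letters only.
            if not ticker.isalpha() or not 1 <= len(ticker) <= 5:
                raise ValueError(f"bad ticker {ticker!r}")
            period_end = date.fromisoformat(rec["period_end"])
            eps = float(rec["eps"])
            if abs(eps) > 1000:  # hypothetical sanity bound on earnings per share
                raise ValueError(f"implausible eps {eps}")
        except (KeyError, TypeError, ValueError) as exc:
            # Missing fields, wrong types, and failed checks are recorded, not propagated.
            rejected.append(f"record {i}: {exc}")
            continue
        accepted.append(Earnings(ticker, period_end, eps))
    return accepted, rejected
```

Because the gate belongs to the downstream component, the upstream extractor can be retrained or swapped without coordinating a release, which is the independent evolution the talk describes.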