Build more reliable AI. This week: master OpenAPI for agents, avoid the MLOps retraining trap, and learn from Meta's hardware reliability playbook.

As artificial intelligence moves from the lab to live production, the conversation is rapidly shifting from "what's possible" to "what's reliable." The initial thrill of a working prototype often gives way to the sobering reality of building robust, scalable, and predictable systems. This is where the real engineering begins-grappling with everything from silent hardware corruptions and flawed data pipelines to the subtle semantic gaps that can cause AI agents to fail spectacularly. The difference between a demo and a durable product lies in mastering these operational details.

This week's digest tackles these production-grade challenges head-on. We explore how to instantly turn any OpenAPI spec into a functional AI tool and, more importantly, why its semantic quality is the key to preventing agent failures. You'll also discover why the common "retraining reflex" in MLOps is often a costly mistake and get a look inside Meta's battle with silent hardware corruption at scale. To round it out, we provide a clear framework for deciding between flexible AI agents and reliable predefined workflows. These expert insights are designed to help you build more resilient systems and make smarter architectural decisions from the start.

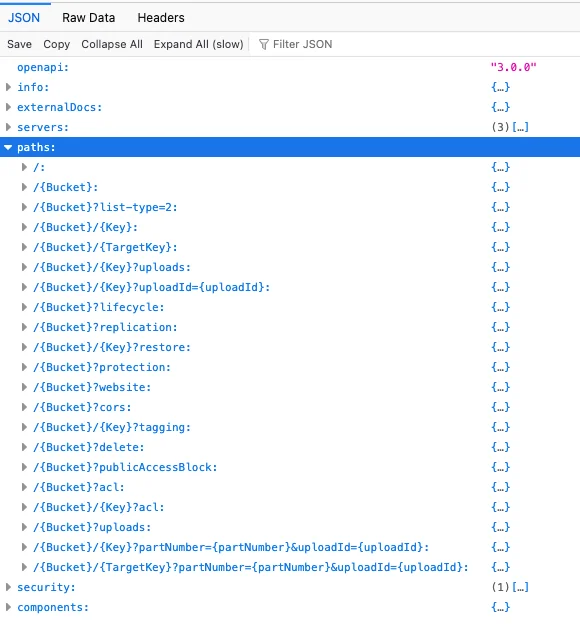

This guide demonstrates how to rapidly create a functional MCP server from any OpenAPI specification using Python and FastAPI. The server automatically parses an API definition (using IBM Cloud Object Storage as an example) and converts each API operation into a callable tool, eliminating the need for manual endpoint coding.

The core mechanism involves reading the OpenAPI JSON, dynamically creating tools for each path, and exposing them via /tools and /invoke endpoints. The system is designed to handle critical functions like authentication with IBM Cloud IAM and dynamic invocation of region-specific API endpoints.

The key takeaway is that developers can stand up a versatile MCP server for virtually any API with an OpenAPI spec. A client can then authenticate, get a token, and use tool_call messages to perform operations like listing or creating resources programmatically.

To effectively integrate with AI agents, the OpenAPI specification must serve as the single source of truth for API descriptions, bridging the gap between human developers and AI consumers. Standard specs often lack the semantic context required by LLMs, leading to a problematic "bifurcation" of documentation. The solution is to enrich OpenAPI docs to describe capabilities, not just technical contracts.

This involves using fields like summary and description for detailed purpose explanations, adopting action-oriented operationIds as tool names, and leveraging JSON-LD for deep semantic meaning. An enriched spec provides the necessary context for an AI to accurately select and use a tool. This allows for a direct, automated conversion from OpenAPI to protocols like MCP (Model-Controller-Protocol).

Failing to maintain high-quality specs can cause agent workflows to fail, lead to AI hallucinations, and introduce security risks like "tool poisoning" via malicious descriptions. This critical mapping can be handled by API gateways or AI-native tools, leveraging existing API investments for the AI-driven future.

This analysis argues against the "retraining reflex" in MLOps, where models are frequently retrained to fix performance drops. The author contends this is often a mistake, as the root cause is rarely stale model weights but rather "misunderstood signals" from flawed data, brittle assumptions, or obsolete features.

Blindly retraining can be harmful. The article cites examples where retraining on temporary anomalies, like a marketing campaign, caused a fraud model to flag good users. Similarly, another model optimized for misleading clicks after a UI change, degrading user satisfaction. Retraining can inject noise, hardcode incorrect assumptions, and mask silent decay when upstream data sources change (e.g., Meta deprecating metrics).

The key takeaway is to treat retraining as a surgical tool, not a routine task. A smarter strategy involves diagnosing the root cause first. Actionable advice includes fixing feature logic, monitoring post-prediction business KPIs, and tracking "model trust signals" like manual overrides. As the author notes, a well-maintained system is one where you can tell what is broken, not one where you simply keep replacing the parts.

Hardware failures are the dominant cause of AI training job interruptions (>66%) at Meta's scale. The primary challenge is silent data corruptions (SDCs)—insidious silicon defects producing incorrect results without error signals, occurring 1000x more frequently than cosmic ray faults.

To combat this, Meta uses a hybrid approach: 'pit stop' offline tests, 'ripple' in-production tests, and 'hardware sentiment' analytics that identify faulty hardware via application failure patterns. The talk concludes with a call for a comprehensive 'factory to fleet' industry approach to ensure reliability from silicon design to application.

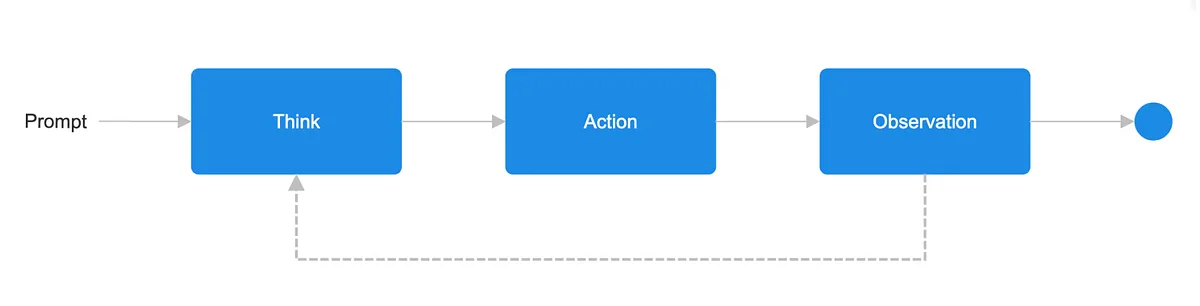

Choosing between AI agents and predefined workflows is crucial for effective automation. AI agents are autonomous systems that excel at handling unpredictable inputs and complex, multi-step decisions through a cycle of Thought → Action → Observation. They are ideal for applications like dynamic customer service bots or personalized marketing campaigns.

In contrast, predefined workflows follow fixed "if-then" logic, offering high reliability and efficiency for structured tasks. They are the superior choice for simple, repetitive processes like data entry or mission-critical systems such as financial transaction processing, where predictability is non-negotiable and resources may be constrained.

The key takeaway is to assess your task's needs. A core insight is the rule of thumb: "If your task requires adaptability, an AI agent may be the best choice. If your task demands strict reliability or efficiency, a predefined workflow may be the best choice." This helps balance flexibility with control.